"Robots" is an affectionate name given to the search engine site crawlers that explore and index the Internet to create search engine results.

Sometimes, there are pages you don't want Google to index, and there are mechanisms that allow you to do this.

Hiding Static Pages from Google

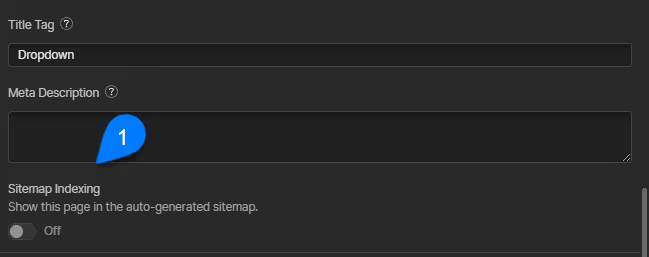

Webflow has added a static page setting called Sitemap Indexing. When this is toggled off, Webflow;

- Excludes the page from your sitemap.xml

- Adds a META noindex tag to the page, so robots know to ignore it for indexing

This feature is found just beneath your title and description settings.

Be careful not to confuse this with Site Search settings...

Hiding Collection Pages from Google

Unfortunately, Collection Pages do not have this setting available.

There you can hide either a specific Collection Page, or all pages in a template, using these approaches.

Hiding all Collection Pages from a Template

Let's suppose you have a News collection, and you want to hide all pages that are generated from Google.

To do that, you can place a special META tag in the <head> custom code of that template page.

This is the tag you need;

<meta name="robots" content="noindex">

Hiding an Individual Collection Page

This same idea can be extended to allow you to hide individual collection items.

- Add an option field to your CMS collection

- Give it two values,

indexandnoindex - Populate your items with the values you want

indexmeans it will appear in SERPSnoindexmeans it will be suppressed from SERPS

- Make that field required

Then, in your collection page template's HEAD custom code area, drop in the META tag-

<meta name="robots" content="">

Inside of the content attribute, between the double-quotes, insert your new option field.

You can now easily control each page's Google indexing individually.

What about robots.txt?

People often imagine that robots.txt is the answer to their Googlebot-exclusion needs, but it's typically not the right answer. Here are a few reasons why;

- It's easy to mess up robots.txt and break your site's SEO entirely

- Robots.txt tells bots what they are allowed to look at, and not what should be indexed. You may well see that page in SERPS, but with no title or description, just a URL.

- If the page has already been indexed, and you add it to robots.txt, the bot will be prevented from re-visiting it, and will never de-index it even if you add the META noindex to the page. It's not allowed to look at the page, therefore it can't see your META, therefore it doesn't act on it.

FAQs

Answers to frequently asked questions.